Zachary W. Huang

Reviewing Some Textbooks

March 22, 2024

Right now, I’m about to start my 6th term at Caltech (12 terms total, 3 per year). So after I finish my next term, I’ll be halfway through my entire undergraduate education, which is pretty crazy to me. It already feels like it’s going by quickly, and I know that it’ll only speed up.

Anyway, I had the idea to review some of the textbooks that I’ve used throughout my first (almost) two years at Caltech. This isn’t intended to be super serious :)

Calculus For Cranks - Nets Katz (Ma1a)

How much I actually read: 85%

Rating: 3/5

This is a pretty decent book if you want to learn about the theoretical foundations of single-variable calculus. However, it is definitely not a great first introduction to the subject (unless you’re a genius). Unfortunately, that is exactly how it was used in Ma1a, which every Caltech freshman must take (unless they place out, which is pretty difficult). Anyway, the textbook defines infinite sequences and uses them to build up limits, differentiability, (Riemann) integrability, and other calculus stuff. For some reason, the later chapters of the book start talking about complex analysis, which I’m not a big fan of. In the end, I don’t think I actually got much out of this book.

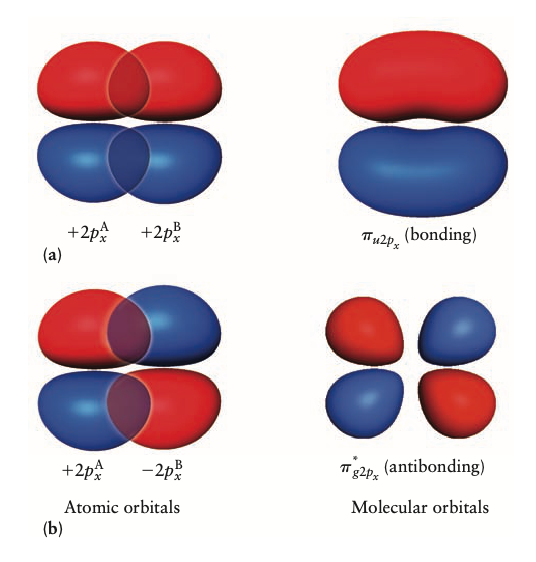

Principles of Modern Chemistry - Oxtoby et al. (Ch1a & Ch1b)

what is even going on???

How much I actually read: 1%

Rating: 2/5

Uh… there’s a reason why I’m not a chemistry major. Statistical mechanics was pretty cool tho

The Mechanical Universe - Frautschi et al. (Ph1a)

How much I actually read: 25%

Rating: 3.5/5

Basically AP Physics 1/2 material plus orbital mechanics with fun illustrations. To be honest, I don’t really remember anything from this textbook (except for the boat problem).

⭐ Special Relativity - Helliwell (Ph1b)

What an amazing cover

How much I actually read: 80%

Rating: 4.5/5

This is actually a really good textbook. It derives basically all the principles of special relativity with only the Pythagorean Theorem and high-school-level algebra. The content is genuinely really cool, yet it remains accessible; it makes you feel like you could have discovered the topic yourself. If I could re-learn this material, I definitely would.

Electricity and Magnetism - Purcell (Ph1b & Ph1c)

How much I actually read: 60%

Rating: 4/5

This is also a really good textbook. Pretty much my entire understanding of electricity and magnetism (which isn’t much, to be fair) stems from this book. It goes over all the principles of static/dynamic electric/magnetic fields before tying everything together with Maxwell’s equations (and a discussion of what happens to these fields in matter). Some parts are hard to understand, but I feel like that comes with the territory. I think the math is at the right level of difficulty, and in general the book definitely helps you build your intuition. If you want to understand the physics underlying our world, this textbook should be a go-to.

⭐ Introduction to the Theory of Computation - Sipser (CS21)

How much I actually read: 70%

Rating: 5/5

I think this is genuinely the best computer science textbook I’ve ever read. I didn’t read all of it because most of the material was also covered in lecture, but otherwise I definitely would have. The book covers automata and languages (finite automata, regular languages, context-free languages), computability (Turing machines, decidability, the halting problem, reductions), and complexity theory (Big O notation, P vs NP, NP-completeness, etc.). It’s really well written, easy to understand, and has just the right amount of mathematical formalism. Even if you’re not interested in theoretical CS, this textbook is still worth reading.

Linear Algebra Done Wrong - Treil (Ma1b)

No cover art :(

How much I actually read: 60%

Rating: 4/5

It’s a decent introduction to linear algebra. The book is a bit more rigorous/theoretical than you might expect, but that’s a positive in my opinion.

Calculus on Manifolds - Spivak (Ma1c)

How much I actually read: 20%

Rating: 1.5/5

I read the first 2 chapters and knew I was not going to learn anything. Even the Wikipedia page says “the text is also well known for its laconic style, lack of motivating examples, and frequent omission of non-obvious steps and arguments.”

A Visual Introduction to Differential Forms and Calculus on Manifolds - Fortney (Ma1c)

How much I actually read: 35%

Rating: 3.5/5

Made more sense than the lecture notes, at least.

⭐ Learning From Data - Abu-Mostafa et al. (CS156a)

How much I actually read: 80%

Rating: 4.5/5

This is a great introduction to machine learning. It’s not a PyTorch tutorial; instead, it lays out the theoretical foundation and motivation for algorithms that can “learn”. It gives solid grounding for concepts like noise, overfitting, regularization, and cross-validation, and it teaches principles that apply to machine learning as a whole (generalization error, data snooping, etc.). I definitely won’t forget the lessons from this textbook.

Introduction to Probability - Blitzstein & Hwang (Ma3)

How much I actually read: 10%

Rating: 4/5

Seems like a solid introduction to probability/statistics.

Conclusion

While writing this post, I was going back over all of the material from my past terms, and I honestly didn’t realize just how much I’ve done. Every 10-week term here is just a ton of work, and all of my past terms are starting to blur together. However, I’m still glad I’ve taken all the classes that I have, and I’m looking forward to learning even more.